I’ve been developing software for ages. I’m a backend developer writing mostly C#, and yes, I like cloud environments and the point where these two meet. That said, I’m also very charmed by Angular and I like to experiment with it. I have experience with the latest ‘family’ of Angular versions, from version 2 up to version 9, which is the latest version at the time of writing this post. I’ve been involved in a couple of projects that run some sort of backend system (API) consumed by an Angular front-end solution.

Getting started

As a (primarily) Microsoft-oriented backend developer, I can start a new Angular project in two ways. One is through Visual Studio, and the other is the Angular CLI ng new command. For me, the first option was often the way to go, because it creates this nice ASP.NET Core shell around your system, which also allowed me to add some steps to the HTTP pipeline. That was a huge advantage for me. The reason Visual Studio builds the project this way is that it allows you to write your backend controllers and the front-end system in the very same project. I never really understood that decision, because most of the time you’d like to separate these. Angular builds a static single-page application website, while your backend is (probably) dynamic and may require some sort of scaling.

Also, this nice ASP.NET Core shell allowed me to host the system quickly and easily in an Azure Web App. Just provision a Service Plan with a web app, configure your pipeline and you’re good. This has been the way to go for me for I think six or seven years, maybe even a bit longer. And then I had a chat with Jan de Vries. We were chatting about migrating our blogs to the HUGO framework and hosting them on Azure. Jan took this even one step further and added CDN and Cloudflare to the story. If you combine my blog post about hosting HUGO in Azure with Jan’s posts on the same subject and on his static website with CDN and Cloudflare, you’ll end up with a complete story on how to host a static website (because yes, an Angular website is a static website) in Azure, on Azure Storage with CDN and Cloudflare, for almost free and lightning fast!

For demo purposes, I have this website called DayFix. It’s an easy and fast way to make appointments with friends, family or whoever. You can create an event and others can tell if they’ll be there. I’ve rewritten the backend for this system to use Azure Functions and table storage. For me, it was time to also update the front-end project, which was written in Angular. I updated the project to Angular 9+ and investigated the hosting options. I decided to give static hosting on Azure, with CDN and Cloudflare, a try.

The pipeline

I removed the ASP.NET Core shell around the Angular project. Basically, I started a new project and migrated everything I needed into it. To do this, I installed Node.js 10 and ran npm i @angular/cli -g, which installs the latest version of the Angular CLI. Then I created a new project with the ng new command. I opened both projects (the old and the new) in VS Code and started copying stuff over.

== PRO TIP ==

Once I started copying stuff over, I noticed that I sometimes got confused about which environment I was working in. I installed the Peacock extension by John Papa, which allows you to color-code your development environment. This way I could easily distinguish the old environment from the new one, which saved me a lot of confusion.

Once my project was updated, I was ready to change my build & release pipeline. I decided to go for a multi-stage YAML pipeline, which lets me write the pipeline as code, so it is under version control, and lets me define both build and deployment in one file. This means my pipeline has two stages: Build (compile) and Deploy.
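To make the two-stage layout concrete, here is a minimal sketch of how such a pipeline file could be organised. The trigger branch, the stage names and the placeholder echo steps are assumptions for illustration only; the real jobs for each stage are shown in the sections that follow.

```yaml
# Sketch of a two-stage pipeline skeleton (assumed names, placeholder steps).
trigger:
  - master # assumption: the branch that should trigger the pipeline

stages:
  - stage: Build
    displayName: Build
    jobs:
      - job: Build
        pool:
          vmImage: "ubuntu-latest"
        steps:
          - script: echo "build steps go here"

  - stage: Deploy
    displayName: Deploy
    dependsOn: Build # only start after the Build stage
    condition: succeeded() # and only when it succeeded
    jobs:
      - deployment: Deploy
        environment: "production"
        pool:
          vmImage: "windows-latest"
        strategy:
          runOnce:
            deploy:
              steps:
                - script: echo "deployment steps go here"
```

Each stage holds its own jobs list, so the build and deployment jobs can be developed and read separately while still living in one version-controlled file.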

Build

Building or compiling your software system gathers all your code, validates it and creates deployable files out of it. For Angular, this means bundling JavaScript, stylesheets and HTML, tree-shaking the result (removing dead/unused code) and storing the output in a configured location. These files must then be deployed by the deployment step. Azure Pipelines uses so-called ‘artifacts’ for this. When you publish a pipeline artifact (meaning, storing files somewhere), you can use that artifact in other stages of your multi-stage pipeline. So here is my build stage:

    jobs:
      - job: Build
        displayName: Build
        pool:
          vmImage: "ubuntu-latest"
        steps:
          - task: NodeTool@0
            displayName: "Install Node.js"
            inputs:
              versionSpec: "10.x"
          - script: |
              npm install -g @angular/cli
              npm install
              ng build --prod
            displayName: "Build Angular Project"
          - task: CopyFiles@2
            displayName: "Copy to artifact staging"
            inputs:
              SourceFolder: "dist/dayfix"
              Contents: "**"
              TargetFolder: "$(Build.ArtifactStagingDirectory)/Website"
          - publish: "$(Build.ArtifactStagingDirectory)/Website"
            displayName: "Publish pipeline artifact"
            artifact: Website

As you can see, I use the ubuntu-latest pool to build. This is because Apple and Linux file systems are WAY faster at handling a huge number of small files (an Angular project) than a Windows file system. The steps you need to perform to build your system are installing Node.js, installing the Angular CLI, restoring npm packages and building the Angular project. The outcome is copied to the artifact staging directory, which is then published. When this stage runs, an artifact is published containing an HTML file, a couple of CSS and JavaScript files, and (probably) some images, fonts and whatever else you use in your Angular project.

Note that the copy task in this stage copies from dist/dayfix to the artifact staging directory. This dist/dayfix, or more generally dist/{projectname}, is the default output directory of the Angular build.

Deployment

Now if you’ve seen my previous post, you should be (at least a little bit) familiar with ARM (or Azure Resource Manager) templates. For the purpose of clarity, I left provisioning the hosting environment out of this blog post. I do want to share how I provisioned my environment. I created a new resource group with a storage account. For the storage account, I left all the defaults as is. Once the creation succeeded, I enabled the ‘static website’ option of the storage account. Now if you enable this, you can set an Index document name and an Error document path. For Angular, I set both to index.html.
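The static website setting described above could also be scripted instead of clicked in the portal. The step below is a sketch under assumptions, not the way this environment was actually provisioned: it reuses the $(ServiceConnectionName) and $(StorageAccountName) variables from the deployment stage and assumes the service connection is allowed to change the storage account’s blob service properties.

```yaml
steps:
  - task: AzureCLI@1
    displayName: "Enable static website"
    inputs:
      azureSubscription: $(ServiceConnectionName)
      scriptLocation: inlineScript
      # The folded scalar (>) joins these lines into a single az command.
      # Both the index document and the 404 document point to index.html.
      inlineScript: >
        az storage blob service-properties update
        --account-name $(StorageAccountName)
        --static-website
        --index-document index.html
        --404-document index.html
```

Setting the 404 document to index.html is what hands unknown paths over to the Angular application.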

Now I told you about this ASP.NET Core shell around the Angular project. Part of this shell is one step in the pipeline that allows your Angular project to run even if the route to a certain file doesn’t exist. To understand this you need to know a little bit about Angular. Angular is a SPA (Single Page Application), meaning there’s only one page (index.html). Portions (or sections) of this page are being replaced depending on the route (address in the browser), or (for example) data received from a server. One mechanism (the Angular Router) takes care of this for you. So if you navigate to https://yourwebsite.com/path/to/a/page this path doesn’t have to exist. Instead, the Angular Router interprets this path and detects that certain components (or page sections) must be loaded and displayed. The above error path helps you to do this.

A short scenario:

1. A user visits https://yourwebsite/path/page
2. This path doesn’t exist, so the error path is followed.
3. Because of the above setting, index.html is loaded.
4. index.html loads Angular and displays your website.
5. The Angular Router recognizes the path in your browser and will display the correct components and page sections.
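The scenario above can be checked after a deployment with a small smoke-test step. This is only a sketch: $(WebsiteUrl) is a hypothetical pipeline variable holding the public address of the site, the step assumes a Linux agent, and it assumes the Angular root element still uses the default app-root selector.

```yaml
steps:
  - script: |
      # Request a deep link that does not exist as a file in the storage container.
      # The error document (index.html) should come back as the response body,
      # so the Angular root element is expected to be present in it.
      # curl runs without --fail on purpose: the body is needed even when the
      # HTTP status code is not 200.
      curl --silent "$(WebsiteUrl)/path/page" | grep -q "<app-root"
    displayName: "Check SPA fallback"
```

If the fallback is not configured, grep finds nothing, exits with a non-zero code, and the step fails.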

And then I created a CDN Profile, with an endpoint pointing to the primary endpoint of my Azure Storage static website.
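For completeness, creating the CDN profile and endpoint could be sketched with the Azure CLI as well. The variables $(ResourceGroupName), $(CdnProfileName) and $(CdnEndpointName) are the ones used by the purge step in the deployment; $(StaticWebsiteHost) is a hypothetical variable holding the host name of the storage account’s static website endpoint, and the Standard_Microsoft SKU is an assumption (SKU availability changes over time, so check what your subscription offers).

```yaml
steps:
  - task: AzureCLI@1
    displayName: "Create CDN profile"
    inputs:
      azureSubscription: $(ServiceConnectionName)
      scriptLocation: inlineScript
      inlineScript: >
        az cdn profile create
        --resource-group $(ResourceGroupName)
        --name $(CdnProfileName)
        --sku Standard_Microsoft

  - task: AzureCLI@1
    displayName: "Create CDN endpoint"
    inputs:
      azureSubscription: $(ServiceConnectionName)
      scriptLocation: inlineScript
      # The origin is the static website host; the host header must match it,
      # otherwise the storage account does not recognise the request.
      inlineScript: >
        az cdn endpoint create
        --resource-group $(ResourceGroupName)
        --profile-name $(CdnProfileName)
        --name $(CdnEndpointName)
        --origin $(StaticWebsiteHost)
        --origin-host-header $(StaticWebsiteHost)
```

With the storage account and CDN in place, the deployment stage of the pipeline looks like this: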

    jobs:
      - deployment: Deploy
        displayName: Deploy
        environment: "production"
        pool:
          vmImage: "windows-latest"
        strategy:
          runOnce:
            deploy:
              steps:
                - task: AzureFileCopy@3
                  displayName: "AzureBlob File Copy"
                  inputs:
                    SourcePath: "$(Pipeline.Workspace)/Website"
                    azureSubscription: $(ServiceConnectionName)
                    Destination: AzureBlob
                    storage: $(StorageAccountName)
                    ContainerName: "$web"
                - task: AzureCLI@1
                  displayName: "Purge CDN"
                  inputs:
                    azureSubscription: $(ServiceConnectionName)
                    scriptLocation: inlineScript
                    inlineScript: 'az cdn endpoint purge --resource-group $(ResourceGroupName) --name $(CdnEndpointName) --profile-name $(CdnProfileName) --content-paths "/*"'

Now the deployment itself is very straightforward. It downloads the pipeline artifact and uploads it to the ‘$web’ container in my Storage account. This ‘$web’ container is created when you enable the Static Website option in Azure Storage. Finally, I purge the CDN. A CDN (or Content Delivery Network) is perfect for web content that doesn’t change that much. It caches the files and is therefore capable of serving them amazingly fast. The downside is that file changes aren’t detected automatically. Purging tells the CDN to drop its cached copies and fetch the files from the original storage location again.

The good news

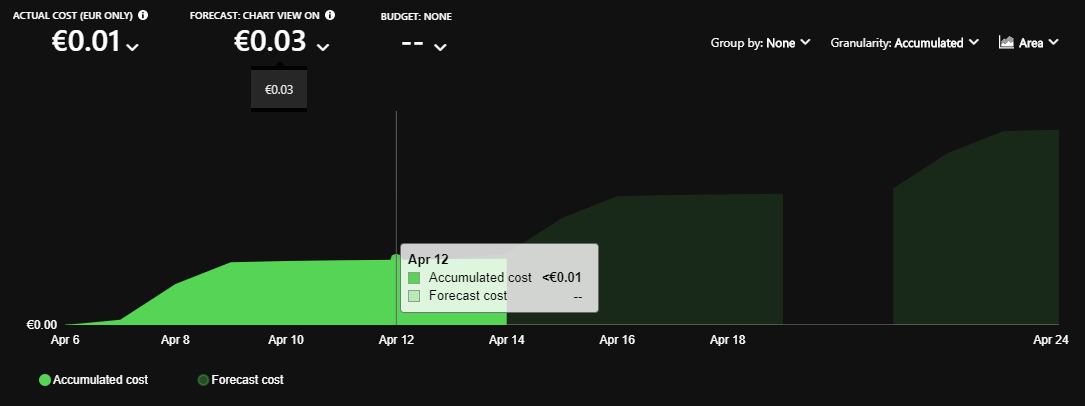

The good news is that I have run this solution for over a week now, and the actual cost of the entire resource group is still LESS THAN 1 CENT in euros. That’s crazy! The forecast for the end of my billing period is 3 euro cents. I truly believe it’s impossible to find a hosting environment as solid, fast and cheap as this one…


Last modified on 2020-04-14